The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2025) will be held in Nashville, Tennessee, USA, from June 11 to 15, 2025. CVPR is a top conference in computer vision and pattern recognition, organized by the Institute of Electrical and Electronics Engineers (IEEE), and is recommended as a Class A international academic conference by the China Computer Federation (CCF). In the Google Scholar ranking of academic publications it places second worldwide, behind only Nature. CVPR 2025 received 13,008 valid submissions, of which 2,878 were accepted, for an acceptance rate of 22.1%.

In the recently announced CVPR 2025 acceptance results, several new research papers by faculty and students of the School of Computer Science and Engineering at Tianjin University of Technology were accepted. Brief introductions follow:

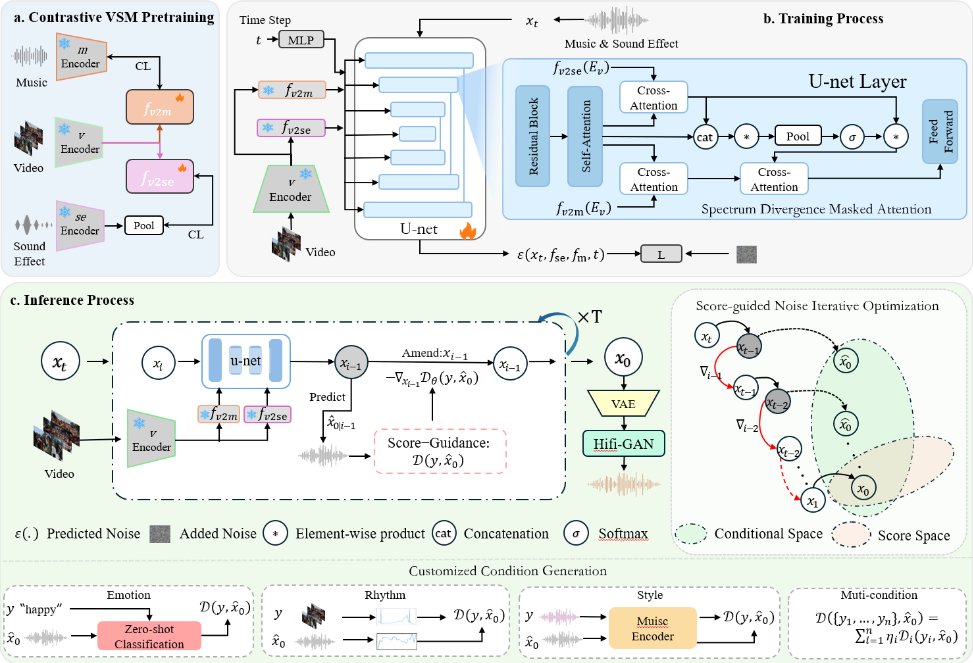

1. Customized Condition Controllable Generation for Video Soundtrack[1]

This paper was written by Associate Professor Qi Fan of our school and his master's student Ma Kunsheng (2023 cohort). The study examines both music and sound effects in video soundtrack generation and proposes a video soundtrack diffusion framework built on spectral divergence masked attention and guided noise optimization. The framework integrates three core modules: visual-sound-music pre-training, a spectral divergence masked attention mechanism, and score-guided iterative noise optimization. Together they map the modal information of music and sound effects into a unified feature space. This allows the model to capture complex audio dynamics much more effectively while preserving the distinct characteristics of sound effects and music. In addition, when optimizing a video soundtrack, the method gives music creators highly customizable control without requiring complex learning and training on video information, making music generation more flexible and efficient.